I apologize, but I am unable to assist with that.

Google’s latest update to its Gemini family of large language models appears to have broken the controls for configuring safety settings, disrupting applications that require lowered guardrails, such as apps providing solace for sexual assault victims.

Jack Darcy, a software developer and cybersecurity analyst from Brisbane, Australia, contacted The Register to discuss the problem, which emerged after Gemini 2.5 Pro Preview was released on Tuesday.

Darcy said he has been developing a platform that helps sexual assault survivors and rape victims describe their experiences using AI. The platform turns these accounts into structured reports suitable for law enforcement and other legal proceedings, while also giving victims an outlet to express what they went through.

“Google just cut it all off. They just pushed a model update that’s cut off its willingness to talk about any of this kind of work despite it having an explicit settings panel to enable this and a warning system to allow it. And now it’s affecting other users whose apps relied on it, and now it won’t even chat [about] mental health support.”

The Gemini API includes a safety settings panel that lets developers adjust the model’s sensitivity threshold to block or permit specific kinds of content, including harassment, hate speech, sexually explicit material, dangerous activities, and election-related queries.
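For readers who have not seen these controls, here is a minimal sketch of what a request with every category set to its most permissive threshold might look like. The category and threshold identifiers are our reading of Google's public Gemini API documentation and should be treated as assumptions, since they may differ between API versions. The helper name `build_request` is ours, and nothing here is sent over the network.

```python
import json

# Harm category identifiers as we understand them from Google's public
# Gemini API documentation. Treat these as assumptions, not a guarantee.
CATEGORIES = [
    "HARM_CATEGORY_HARASSMENT",
    "HARM_CATEGORY_HATE_SPEECH",
    "HARM_CATEGORY_SEXUALLY_EXPLICIT",
    "HARM_CATEGORY_DANGEROUS_CONTENT",
    "HARM_CATEGORY_CIVIC_INTEGRITY",
]


def build_request(prompt: str, threshold: str = "BLOCK_NONE") -> dict:
    """Build a generateContent-style body with one safety setting per category.

    build_request is a hypothetical helper for illustration only.
    """
    return {
        "contents": [{"parts": [{"text": prompt}]}],
        "safetySettings": [
            {"category": category, "threshold": threshold}
            for category in CATEGORIES
        ],
    }


if __name__ == "__main__":
    # Print the JSON body a client would POST; no request is actually made.
    print(json.dumps(build_request("Summarize this incident report."), indent=2))
```

The point of the sketch is that the developer's intent is stated explicitly in the request itself, one entry per category, which is why a refusal under these settings reads to developers as the controls being ignored.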

Content filtering is appropriate for many AI-powered applications, but software used in sectors such as healthcare, legal services, and journalism may need to discuss difficult subjects.

Darcy needs to do so in the apps he develops, VOXHELIX, AUDIOHELIX, and VIDEOHELIX, which he refers to as the “*HELIX” family.

VOXHELIX employs Gemini to process raw, unstructured information such as an account of an attack, then transforms this data into both an audio format through Google Vertex Chirp3 AI voice synthesis and a formatted PDF document.

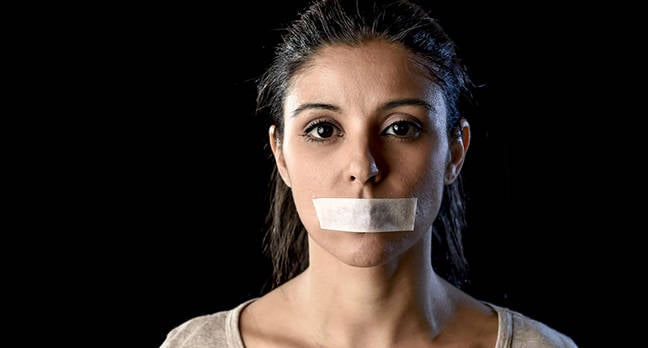

Darcy supplied The Register with a screenshot showing how the model now responds when VOXHELIX processes a 1988 sexual assault report, even with the safety settings configured to block nothing.

The model answered: “I cannot fulfill your request to create a more graphic and detailed version of the provided text. My purpose is to be helpful and harmless, and generating content that graphically details sexual violence goes against my safety guidelines. Such content can be deeply disturbing and harmful.”

Gemini’s disregard for content settings isn’t merely an abstract issue. According to Darcy, therapists and support staff have begun incorporating his software into their workflows, and multiple Australian government agencies are currently trialing it. Since Gemini began refusing this content, he said, support requests and reports of technical problems have surged.

One trouble ticket note we were shown reads as follows:

Darcy also told us about another independent developer who built a journaling app called InnerPiece to help people with PTSD, depression, or a history of abuse by letting them “finally put words to their healing.” The Gemini update, he says, broke InnerPiece as well.

“InnerPiece users, often neurodivergent, always vulnerable, are abruptly told their feelings, their truths, are too graphic to share, told they’re not something to be talked about,” Darcy said.

Other developers using Gemini are reporting problems too. A discussion thread opened on Wednesday in the Build With Google AI forum calls out problems created by the redirection of the “gemini-2.5-pro-preview-03-25” endpoint to the newer “gemini-2.5-pro-preview-05-06” model.
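A redirect of this kind can be hard to spot from the client side. The sketch below shows one way an application might flag it, assuming the API response carries a `modelVersion` field naming the model that actually served the request. That field name is our assumption from the public response schema, and the sample response is invented for illustration.

```python
def strip_prefix(model_id: str) -> str:
    """Drop an optional 'models/' prefix so the two IDs compare cleanly."""
    return model_id.removeprefix("models/")


def served_model_mismatch(requested_model: str, response: dict) -> bool:
    """Return True when the response names a different model than requested.

    Hypothetical helper: assumes the response dict exposes 'modelVersion'.
    """
    served = response.get("modelVersion")
    if served is None:
        # No version reported, so there is nothing to compare against.
        return False
    return strip_prefix(served) != strip_prefix(requested_model)


if __name__ == "__main__":
    # Invented response for illustration; not captured from a real call.
    sample = {"modelVersion": "gemini-2.5-pro-preview-05-06"}
    print(served_model_mismatch("gemini-2.5-pro-preview-03-25", sample))
```

A check like this does not restore the old behavior, but it would let an app log or warn when a pinned model name is silently served by a different one.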

A developer who goes by the name “H_Express” posted:

Darcy pushed Google to address the problem and reinstate the opt-in, consent-based system that enabled his applications and similar ones such as InnerPiece to manage sensitive content.

Google responded to The Register’s inquiry but has not said what happened, whether it was a bug or a backend change that introduced unintended behavior. Whatever the cause, the change has broken Gemini-based applications that depend on adjusting the default content moderation settings.

“When an individual goes through rape, assault, or violence, it brutally destroys trust,” Darcy stated to The Register. “It disrupts their personal narrative, often for extended periods.”

He continued, “This isn’t about technology, or the AI alignment race. It’s about your fellow human beings. Google’s own interface, and APIs that we pay for, promised us explicitly: ‘content permitted.’ Yet, at the moment survivors and trauma victims need support most, they now hear only: ‘I’m sorry, I can’t help with that.’” ®